Improving Bird Classification with Unsupervised Sound Separation

Tom Denton, Scott Wisdom, John R. Hershey

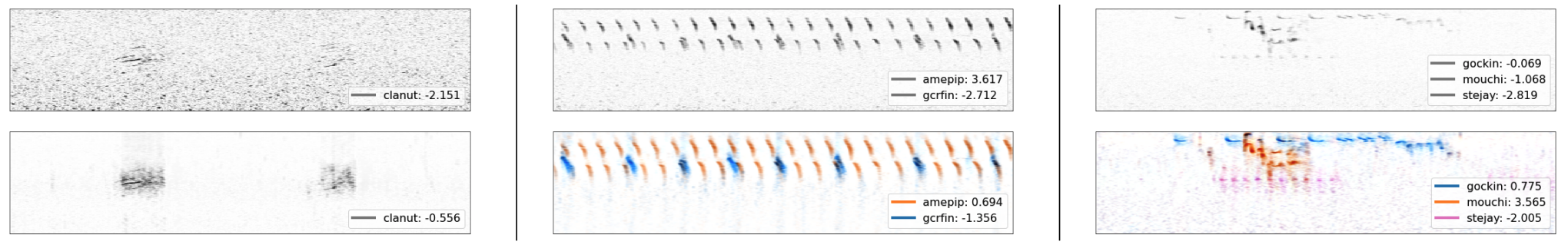

Separate+classify examples. Top plots show PCEN mel-spectrograms of the original audio, and bottom plots show PCEN mel-spectrograms of the separated audio, with each separated channel color-coded. The legend gives the ensemble logits for the ground-truth species. Left: a Clarke's Nuthatch in the High Sierras dataset, illustrating that simple noise suppression in the separated channel often improves logits for isolated calls with low SNR. Center: a challenging two-source example from the High Sierras dataset. Right: three-species separation from the Caples dataset.
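The separate+classify scheme illustrated here runs the classifier on the original audio and on each separated channel; according to the abstract, the best performance comes from taking the maximum model activation over all of these inputs. The following is a minimal NumPy sketch of that pooling step. It assumes per-species logits have already been computed for each input, and the function name `combine_separated_logits` and the array shapes are hypothetical, not taken from the released code.

```python
import numpy as np


def combine_separated_logits(original_logits, separated_logits):
    """Pool per-species logits over the original audio and every separated channel.

    original_logits: shape [num_species], classifier output on the unseparated audio.
    separated_logits: shape [num_channels, num_species], one row per separated channel.
    Returns the element-wise maximum over all inputs, shape [num_species].
    """
    stacked = np.vstack([original_logits[np.newaxis, :], separated_logits])
    return stacked.max(axis=0)


# Toy example with three species and two separated channels (illustrative values).
orig = np.array([-2.0, 0.5, -1.0])
sep = np.array([[1.5, -3.0, -0.5],
                [-4.0, 0.2, 0.8]])
combined = combine_separated_logits(orig, sep)
print(combined)  # [1.5 0.5 0.8]
```

Because the sigmoid is monotonic, taking the maximum over logits and then applying a sigmoid gives the same result as taking the maximum over per-species probabilities.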

Abstract

This paper addresses the problem of species classification in birdsong recordings. The large volume of available field recordings of birds presents an opportunity to use machine learning to automatically track bird populations. However, it also poses a problem: such field recordings typically contain significant environmental noise and overlapping vocalizations that interfere with classification. The widely available training datasets for species identification also typically leave background species unlabeled, which leads classifiers to ignore vocalizations with a low signal-to-noise ratio. Recent advances in unsupervised sound separation, such as mixture invariant training (MixIT), make it possible to learn high-quality separation of bird songs from such noisy recordings. In this paper, we demonstrate improved separation quality when training a MixIT model specifically on birdsong data, outperforming a general audio separation model by over 5 dB in SI-SNR improvement of reconstructed mixtures. We also demonstrate precision improvements for a downstream multi-species bird classifier across three independent datasets. The best classifier performance is achieved by taking the maximum model activations over the separated channels and the original audio. Finally, we document additional classifier improvements, including taxonomic classification, augmentation with random low-pass filters, and additional channel normalization.

Paper

"Improving Bird Classification with Unsupervised Sound Separation"

Blog Post

Google AI Blog: "Separating Birdsong in the Wild for Classification"

Audio Demos

• Caples
• High Sierras
• Sapsucker Woods

Model Checkpoints

• Separation model checkpoints on GitHub.
• Classification models on GitHub.

Last updated: May 2022